Mechanisms for localization

It is important for understanding the workings of stereophony that you are convinced that all three mechanisms are significant, and I would suggest, with Keele, Snow, and Moir, that the pinnae are first among equals. You should satisfy yourself on some of this by running water in a sink to get a nice complex high-frequency source. Close your eyes to avoid bias, block one ear to reduce ILD and ITD, and see if you can localize the water sound with just the one open ear. Point to the sound, open your eyes, and like most people you will be pointing correctly within a degree or so. With both ears you should be right on, despite having a signal too high in frequency to have much ITD or ILD. But with two pinnae agreeing and the zero-ILD cue, the localization is easily accurate.

Again, if a system like stereo or 5.1 cannot deliver the ITD, ILD and pinna cues intact, without large errors, it can never deliver full localization verisimilitude for signals like music. If the cues are inconsistent, localization may occur but it is fragile: it may vary with the note or instrument played, and such localization is usually accompanied by a sense that the music is canned and lacks depth, presence, etc. Mere localization is no guarantee of fidelity.

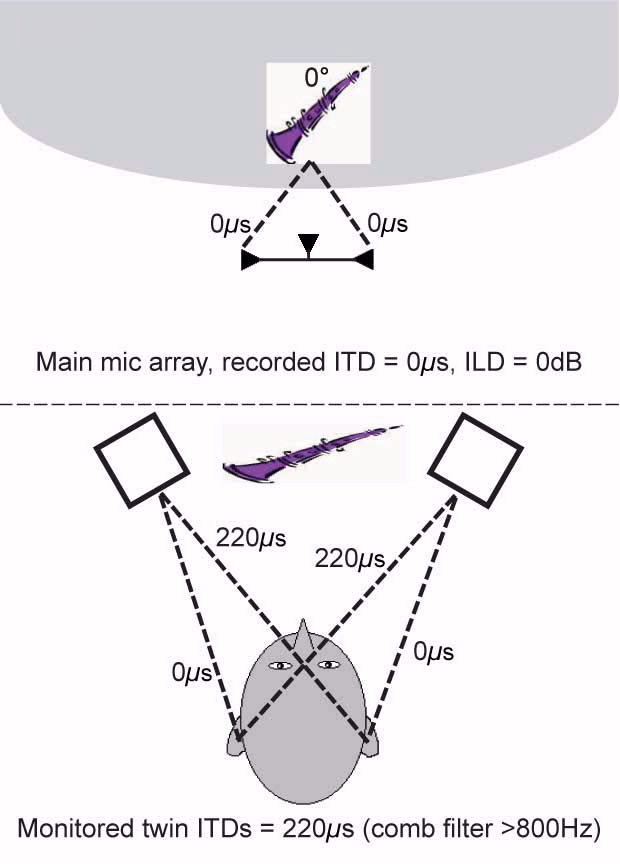

Let us now look at the stereo triangle in reproduction, and at the microphones used to make such recordings, and see what happens to the three localization cues. Basically, stereophony is an auditory illusion, like an optical illusion. In an optical illusion the artist uses two-dimensional artistic tricks to stimulate the brain into seeing a third dimension, something not really there. The Blumlein stereo illusion is similar in that most brains perceive a line of sound between two isolated dots of sound. Just as one is always aware that an optical illusion is not real, one would never confuse the stereophonic illusion with a live binaural experience. For starters, the placement of images on the line is nonlinear as a function of ITD and ILD, and the length of the line is limited to the angle between the speakers. (I know, everyone, including Blumlein, has heard sounds beyond the speakers on occasion, but diatribe space is limited.)
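To put a number on how nonlinear and how bounded the phantom line is, here is a minimal sketch using the textbook stereophonic tangent law, tan(θ)/tan(θ0) = (g1 − g2)/(g1 + g2), for two speakers at ±θ0 fed the same signal at different levels. The tangent law is an assumed model brought in for illustration, not the author's method; it covers only level (ILD-producing) panning and says nothing about ITD-based placement or pinna cues. The function name `tangent_law_azimuth` is hypothetical.

```python
import math


def tangent_law_azimuth(level_diff_db, speaker_half_angle_deg=30.0):
    """Phantom-image azimuth in degrees (positive toward the louder speaker)
    predicted by the stereophonic tangent law (an assumed model):
        tan(theta) / tan(theta0) = (g1 - g2) / (g1 + g2)
    for two speakers at +/- theta0 fed one signal with a level difference."""
    g_loud = 10.0 ** (level_diff_db / 20.0)  # linear gain of the louder speaker
    g_soft = 1.0                              # quieter speaker is the reference
    ratio = (g_loud - g_soft) / (g_loud + g_soft)
    theta0 = math.radians(speaker_half_angle_deg)
    return math.degrees(math.atan(ratio * math.tan(theta0)))


# Standard equilateral triangle: speakers at +/-30 degrees.
for diff_db in (0, 3, 6, 10, 20, 40):
    print(f"{diff_db:>2} dB -> image at {tangent_law_azimuth(diff_db):4.1f} deg")
```

Under this model the first 10 dB of level difference moves the image roughly 17 degrees, while even 40 dB leaves it just short of the 30-degree speaker, which is one way to picture both the nonlinear placement and the hard limit at the speaker angle.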

I want to get to the ILD/ITD phantom imaging issue involved in this topic, but let us first get the pinna issue tucked away. No matter where you locate a speaker, high frequencies above 1000 Hz can be detected by the pinna, and the location of the speaker will be pinpointed unless other competing cues override or confuse this mechanism. In the case of the stereo triangle, the pinna and the ILD/ITD cues agree near the locations of the speakers. Thus in 5.1, LCR triple mono sounds fine, especially for movie dialog. In stereo, for central sounds, the pinna angle-of-impingement error is overridden by the brain because the ITD and the ILD are consistent with a centered sound illusion, since the signals at the two ears are equal in level and timing. The brain also ignores the bogus head shadow, since its coloration and attenuation are symmetrical for central sources and not large enough to destroy the stereo sonic illusion. Likewise, the comb filtering due to crosstalk, in the pinna frequency region, interferes with the pinna direction-finding facility, thus forcing the brain to rely on the two remaining lower-frequency cues. All these discrepancies are consciously or subconsciously detected by golden ears, who spend time and treasure striving to eliminate them and make stereo perfect. Similarly, the urge to perfect 5.1 is now manifest.
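Why crosstalk comb filtering lands in the pinna region can be estimated with a few lines of arithmetic. This sketch assumes Woodworth's spherical-head approximation for the interaural delay, ITD = (a/c)(θ + sin θ), with an assumed head radius of 8.75 cm and c = 343 m/s; none of these values come from the text. For a centered phantom from speakers at ±30 degrees, each ear hears the near speaker and, one 30-degree ITD later, the far speaker, and the sum of a signal with a delayed copy of itself has nulls at odd multiples of 1/(2·delay). Head shadow, which would make the far copy weaker and the notches shallower, is deliberately ignored, as are pinna, torso and room effects. The helper names are hypothetical.

```python
import math

# Assumed constants (not from the text): a typical adult head radius and
# the speed of sound in room-temperature air.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def woodworth_itd(azimuth_deg):
    """Interaural time difference in seconds for a distant source, using
    Woodworth's spherical-head approximation (a/c)(theta + sin theta)."""
    theta = math.radians(abs(azimuth_deg))
    return HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


def comb_notches(delay_s, f_max_hz=20000.0):
    """Null frequencies of x(t) + x(t - delay): odd multiples of 1 / (2 * delay)."""
    notches = []
    k = 0
    while True:
        f = (2 * k + 1) / (2.0 * delay_s)
        if f > f_max_hz:
            break
        notches.append(f)
        k += 1
    return notches


# Centered phantom from speakers at +/-30 degrees: at each ear the far
# speaker's copy arrives one 30-degree ITD after the near speaker's copy.
crosstalk_delay = woodworth_itd(30.0)
notches = comb_notches(crosstalk_delay)
print(f"crosstalk delay: {crosstalk_delay * 1e3:.3f} ms")
print("notches (Hz):", [round(f) for f in notches])
```

With these assumptions the delay is about 0.26 ms and the first notch falls near 1.9 kHz, with further notches near 5.7, 9.6, 13.4 and 17.2 kHz, squarely in the range where the pinna does its direction finding.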

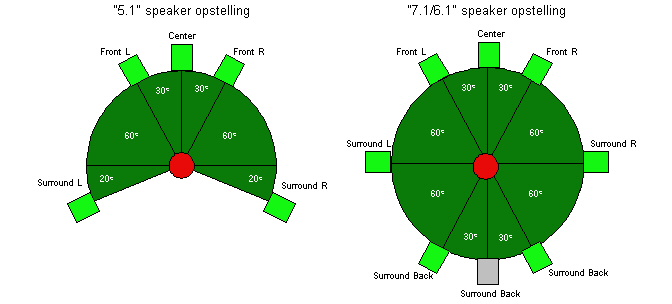

Consider just the three front speakers in 5.1. Unless we are talking about three-channel mono, we really have two stereo systems side by side. Remember, stereo is a rather fragile illusion. If you listen to your standard equilateral stereo system with your head facing one speaker and the other speaker moved in to 30 degrees off axis, you won't be thrilled. The ILD is affected since the head shadows are not the same, with one speaker casting virtually no head shadow and the other a 30-degree one. Similarly, the pinna functions are quite dissimilar. (In the LCR arrangement the comb-filtering artifacts are now at their worst in two locations, at plus and minus 15 degrees, instead of just around 0 degrees as in stereo.) Thus for equal amplitudes (such as L&C), where a signal is centered at 15 degrees as in our little experiment, the already freakish stereo illusion is badly strained. Finally, the ITD is still okay, and this partly accounts for the fact that, despite the center speaker, there is still a sweet spot in almost all home 5.1 systems. Various and quite ingenious 5.1 recording systems try to compensate for some of these errors, but the results are highly subjective and even controversial. It is also probably lucky that in 5.1 recording it is difficult to avoid an ITD, since a coincident main microphone is seldom used in this environment.
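The asymmetry of the L/C pair can be sketched with the same kind of arithmetic. This assumes the Woodworth spherical-head model again (assumed head radius 8.75 cm, c = 343 m/s), in which a distant source at azimuth θ reaches the near ear (a/c)·sin θ before the head center and the far ear (a/c)·θ after it. With the listener facing C, the code compares the first crosstalk notch at each ear for a stereo pair at ±30 degrees (phantom at 0) and for an equal-level L/C pair at −30 and 0 degrees (phantom at −15). Head shadow, pinna response and the room are ignored, and the function names are hypothetical.

```python
import math

# Assumed constants (not from the text).
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def ear_arrival_s(azimuth_deg, ear):
    """Arrival time at one ear relative to the head centre for a distant source,
    Woodworth spherical-head model. Negative azimuth means the source is to the
    listener's left; ear is "left" or "right". At 0 degrees both ears get 0."""
    theta = math.radians(abs(azimuth_deg))
    scale = HEAD_RADIUS_M / SPEED_OF_SOUND_M_S
    near_ear = (azimuth_deg < 0) == (ear == "left")
    if near_ear:
        return -scale * math.sin(theta)  # direct path, arrives early
    return scale * theta                 # path wraps around the head, arrives late


def first_notch_hz(speaker_a_deg, speaker_b_deg, ear):
    """First null of the comb filter formed at one ear by two equal-level speakers."""
    delay = abs(ear_arrival_s(speaker_a_deg, ear) - ear_arrival_s(speaker_b_deg, ear))
    return 1.0 / (2.0 * delay)


pairs = {
    "stereo L/R at -30/+30 (phantom at 0)": (-30.0, 30.0),
    "LCR L/C at -30/0 (phantom at -15)": (-30.0, 0.0),
}
for name, (a_deg, b_deg) in pairs.items():
    left = first_notch_hz(a_deg, b_deg, "left")
    right = first_notch_hz(a_deg, b_deg, "right")
    print(f"{name}: left ear {left:.0f} Hz, right ear {right:.0f} Hz")
```

In this simplified model the stereo pair gives the same first notch, about 1.9 kHz, at both ears, while the L/C pair moves it up to roughly 3.9 kHz at the left ear and 3.7 kHz at the right. The phantom at 15 degrees is still comb filtered in the pinna region, and the two ears no longer receive matching patterns, one more way the L/C "stereo system" differs from a symmetric triangle.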
